This post forms part 2 of the series I started in the last post, which focused on using the energy balance over the period of 0-2000m OHC data to estimate sensitivity. As you recall, I noted that using the radiative response over this shorter period actually overestimated temperature sensitivity in the GISS-ER and GFDL CM2.1 model runs, so I wanted to test how the radiative response over this shorter period compares to the “effective sensitivity” in all the CMIP5 runs.

1. Effective Sensitivity in CMIP5 Runs

Note that I am using “effective sensitivity” here in the sense of Soden and Held (2006): the net radiative response to an increase in surface temperature over a long-term scenario (units W/m^2/K). In my specific case, I am using the RCP4.5 scenario runs from the CMIP5 models, which stabilize the anthropogenic forcing change at roughly 4.5 W/m^2 by 2100. The calculation uses the differences in forcing, net radiative imbalance, and temperature between the two periods 1860-1880 and 2080-2100.
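As a rough sketch of that calculation (not the post's own R script), the snippet below assumes the usual linear energy balance N = F + λT, so that the response is λ = (ΔN − ΔF)/ΔT. The function name and the input numbers are illustrative assumptions, not values from any CMIP5 run.

```python
def effective_sensitivity(delta_n, delta_f, delta_t):
    """Net radiative response per degree of warming, in W/m^2/K.

    Assumes the linear energy balance N = F + lambda * T, which gives
    lambda = (dN - dF) / dT. A stabilizing response comes out negative.
    """
    if delta_t == 0:
        raise ValueError("temperature change must be non-zero")
    return (delta_n - delta_f) / delta_t


# Illustrative inputs only: differences between a late and an early period
# in TOA imbalance (W/m^2), forcing (W/m^2), and surface temperature (K).
lam = effective_sensitivity(delta_n=1.0, delta_f=4.5, delta_t=2.5)
print(lam)  # -1.4
```

With this sign convention a more negative value means a stronger restoring response, and therefore a lower temperature sensitivity.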

I grabbed the data from Climate Explorer using a script (you’ll need to register and insert your own e-mail address), which I developed with some help from this Climate Audit post that cut down the learning curve. This is not a complete set of all CMIP5 models available at Climate Explorer at the time, as some seemed to be missing radiation fields and others caused my script to choke, but it is *almost* all of them. Anyhow, here are the diagnosed “effective sensitivities” in the individual model runs (note again that if you were assuming a constant radiative response, you could determine the equilibrium climate sensitivity as 3.7 divided by the magnitude of the effective sensitivity):

I have not compared these results to Andrews et al. (2012), but if anybody has a copy of this paper I would love to do so. Anyhow, as you can see, the runs of each model are pretty tightly clustered with one another, which is to be expected. One exception was CESM1-CAM5, but after looking at several of the runs it was clear that they had an offset in the splicing of the beginning of the RCP4.5 run onto the end of the historical run. Looking at this chart again, I’m noticing something suspicious in one of those CCSM4 runs, with 5 runs very closely clustered and then 1 “rogue” one. I will need to check if that is an offset error as well. Anyhow, here is a look at the mean “effective sensitivities” and number of runs for each model:

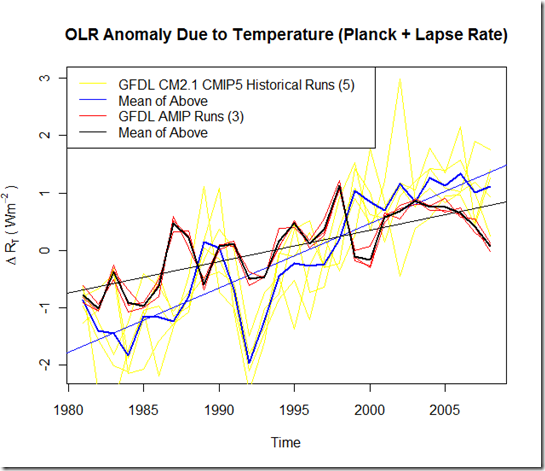

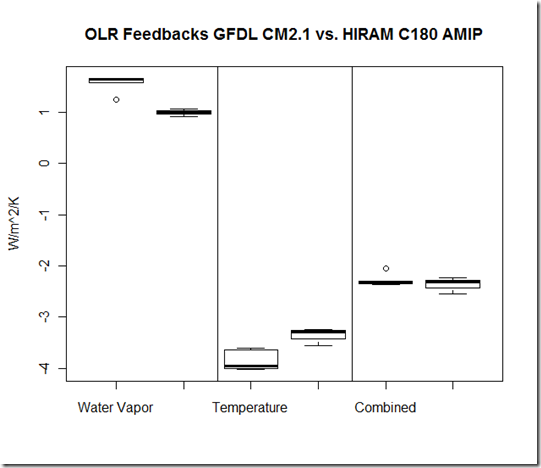

2. Shorter-Term Radiative Response (from last 40 years) in CMIP5 Runs

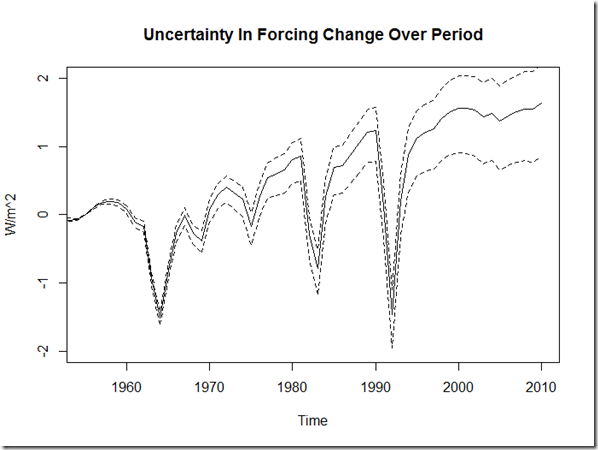

In order to test the method I used in the last post, here we will determine the radiative response based on the difference (in TOA imbalance and surface temperature) between the 2005-2011 period and the 1965-1974 period. The latter (1965-1974) period was chosen by finding the best correlation between the resulting radiative response diagnosed for the models and the longer “effective sensitivity” for those same models.
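The baseline search described above could be sketched roughly as follows. This is an illustration under stated assumptions rather than the post's actual R code: the function names, the `runs` data layout, the list of candidate periods, and the single forcing series shared by all runs are all hypothetical choices made for the example.

```python
from statistics import mean


def period_mean(series, period):
    """Mean of an annual {year: value} series over an inclusive (start, end) period."""
    start, end = period
    return mean(series[year] for year in range(start, end + 1))


def radiative_response(n, f, t, early, late):
    """(dN - dF) / dT between two periods, in W/m^2/K."""
    d_n = period_mean(n, late) - period_mean(n, early)
    d_f = period_mean(f, late) - period_mean(f, early)
    d_t = period_mean(t, late) - period_mean(t, early)
    return (d_n - d_f) / d_t


def r_squared(xs, ys):
    """Squared Pearson correlation of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy * sxy / (sxx * syy)


def best_baseline(runs, forcing, late, candidates):
    """Return (r2, period) for the candidate baseline with the highest r^2.

    runs: list of dicts with annual series under 'n' (TOA imbalance) and
    't' (surface temperature), plus the long-term value under 'eff'.
    forcing: one annual forcing series applied to every run (an assumption).
    """
    eff = [run["eff"] for run in runs]
    scored = []
    for early in candidates:
        short = [
            radiative_response(run["n"], forcing, run["t"], early, late)
            for run in runs
        ]
        scored.append((r_squared(short, eff), early))
    return max(scored)
```

A call such as `best_baseline(runs, forcing, (2005, 2011), [(1960, 1969), (1965, 1974)])` would return the better-correlated of the two candidate baselines along with its r^2.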

One huge difficulty with determining this radiative response for a model is that the TOA forcing data is simply not available (at least, in my experience). It requires a special fixed SST run to do this calculation***, and I don’t believe this is included in the CMIP5 archive. Thus, I have decided to simply use the GISS forcings for ALL the calculations, and this will cause some slight inaccuracy in the diagnosed radiative response for other models. Nonetheless, here is the radiative response as diagnosed:

For reference, using the GISS estimate for the aerosol forcing (and not the IPCC one, which is of smaller magnitude), my test in the previous post resulted in a –2.4 +/- 0.8 W/m^2/K response over this same period, which is a good deal stronger than most model runs.

***The Forster and Taylor (2006) method uses the inverse of the long-term radiative response to estimate forcing, but we can’t do that here because we are testing the relationship between the shorter-term and long-term response, and the assumption that these would be the same would be begging the question.

3. Relationship between 40 year Radiative Response and Effective Sensitivity

The plot below shows the relationship between the 40-year response and the effective sensitivity. The r^2 value is 0.36, which is pretty strong despite the fact that I have essentially ignored the difference in aerosol forcing between the models.

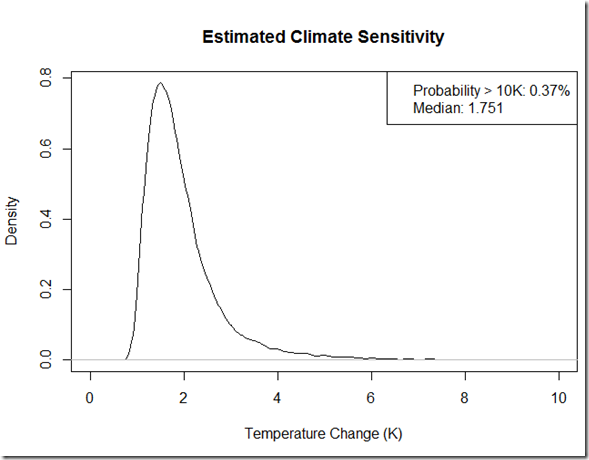

So, what can this knowledge, combined with the previous test, tell us about effective sensitivity of the real world system? The chart below includes red lines showing the least/likely/most radiative response from observations over that same period (–2.4 +/- 0.8 W/m^2/K, again assuming GISS forcings rather than the IPCC):

If we were confident in that regression, our “likely” estimate for “effective sensitivity” would be right around –2.0 W/m^2/K, which would correspond to an ECS of ~1.85 K (3.7/2.0) if we assumed a negligible difference between the “effective sensitivity” radiative response and the response over the full time it takes to equilibrate. However, I don’t think much stock can be placed in that regression, given that we have not used particularly accurate aerosol forcing data for the individual models, and the observed radiative response is well outside the main cluster of models.

I think this latter fact is the more interesting one qualitatively: there IS a fairly strong underlying relationship between this 40-year radiative response and the longer-term “effective sensitivity”, and only 3 of all the model runs looked at here have a radiative response that falls within the 2.5%-97.5% uncertainty range as diagnosed from OHC in my last post. One of those “compatible” runs is a rogue CCSM4 run that is almost certainly affected by an offset issue. I am curious about the other 2 models/runs that diverged from the pack as well, but these don’t seem likely to be “rogue” runs because their corresponding effective sensitivities (which would also be affected by an offset issue) are normal. Regardless, given that the modeled aerosol forcings tend to be larger in magnitude than in satellite estimates, this line of evidence would suggest it is even more likely that the effective temperature sensitivity of almost all CMIP5 models is too high.
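The conversion from a radiative response to an ECS figure can be checked with a couple of lines. This is only a sketch: the function name is made up for illustration, and it assumes a constant radiative response and the 3.7 W/m^2 doubled-CO2 forcing used earlier in the post.

```python
def ecs_from_response(response, f_2xco2=3.7):
    """Equilibrium warming (K) for doubled CO2, assuming a constant response.

    response is in W/m^2/K; only its magnitude matters, so the negative
    sign convention used in the post is accepted as-is.
    """
    if response == 0:
        raise ValueError("radiative response must be non-zero")
    return f_2xco2 / abs(response)


print(round(ecs_from_response(-2.0), 2))  # 1.85
```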

This presents an additional test beyond just comparing temperature trends to models, because temperature and radiative imbalance will be negatively correlated if all else is kept equal. So in the event that you get a lower temperature trend in the real world than in models due to La Nina conditions towards the end of the period, you should see an increase in TOA imbalance relative to models as a consequence of this unforced cooling, assuming the radiative responses of the real world and the models are about the same. However, as both the temperature trend AND the TOA imbalance trend are smaller than in almost all CMIP5 models over this period, La Nina would not serve to explain the situation. This leaves some combination of the following possibilities that I can see: 1) incorrect diagnosis of TOA imbalance from 0-2000m OHC, 2) aerosol forcing greatly exceeds that of GISS (which itself greatly exceeds the IPCC best estimate), 3) some other unknown forcing, 4) too high an effective temperature sensitivity in the CMIP5 models.
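The sign argument can be written out in one line. The notation is an assumption introduced here, not something defined in the post: N is the TOA imbalance, F the forcing, T the surface temperature anomaly, and λ the (negative) radiative response in the linear energy balance.

```latex
N = F + \lambda T, \qquad \lambda < 0
\quad\Longrightarrow\quad
\delta N = \lambda\,\delta T > 0
\quad \text{when } \delta F = 0 \text{ and } \delta T < 0 .
```

Under that assumption, unforced cooling by itself lowers the temperature trend while raising the TOA imbalance, so it cannot account for both being lower than in the models.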

Data and Code

The following script, ProcessCMIP5Data.R, accesses the CMIP5 data in my public folder and creates the figures for the above post. HOWEVER, there are quite a few files, and processing will be slow if you run the turnkey script as is. Instead, I recommend you download the data to your local machine first, unzip it, and then change “baseURL” in the script to point to the folder you unzipped it into.