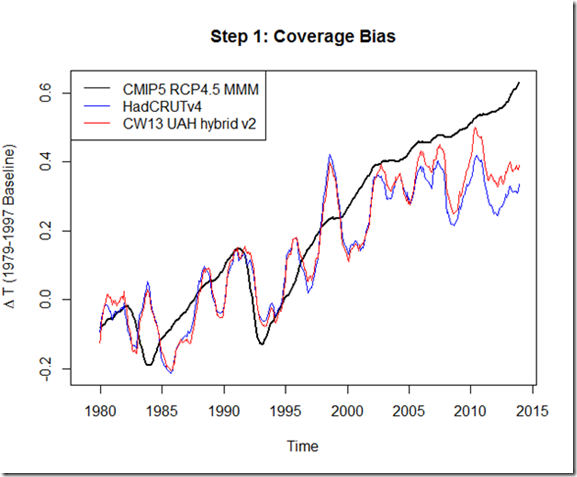

Thanks to commenter Gpiton, who, on my last post about attributing the pause, alerted me to the commentary by Schmidt et al. (2014) in Nature (hereafter SST14). In the commentary, the authors attempt to see how the properties of the CMIP5 ensemble and mean might change if updated forcings were used (by running a simpler model), and find that the results are closer to the observed temperatures (thus primarily attributing the temperature trend discrepancy to incorrect forcings). Overall, I don’t think there is anything wrong with the general approach, as I did something very similar in my last post. However, I do think that some of the assumptions about the cooler forcings in the "correction" are more favorable to the models than others might choose, and that one of the conclusions could easily be misinterpreted. This is my list of comments that, were I a reviewer, I would submit:

Comment #1: The sentence

We see no indication, however, that transient climate response is systematically overestimated in the CMIP5 climate models as has been speculated, or that decadal variability across the ensemble of models is systematically underestimated, although at least some individual models probably fall short in this respect.

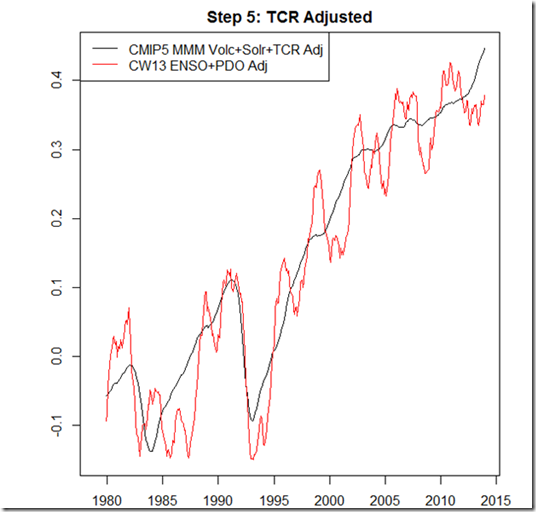

is vague enough to perhaps be technically true while at the same time giving the (incorrect, IMO) impression they have found that models are correctly simulating TCR or decadal variability. It may be technically true in that they find "no indication" of bias in TCR or internal variability, due to the residual "prediction uncertainty", but this is one of those "absence of evidence is not evidence of absence" scenarios, where even if the models WERE biased high in their TCR there would be no indication by this definition. By the description in the commentary, the adjustments only remove about 60-65% of the discrepancy. The rest of the discrepancy may be related to non-ENSO noise, but it also may be related to TCR bias, and would be what we expect to see if, for example, the "true" TCR was 1.3K (as in Otto et al., 2013) vs. the CMIP5 mean of 1.8K. Obviously, the reference to Otto et al. (2013) might be mistaken by some to suggest an answer/refutation to that study (which used a longer period to diagnose TCR in order to reduce the "noise"), but clearly this would be wrong. Had I been a reviewer, I would have suggested changing the wording: "The residual discrepancy may be consistent with an overestimate of the transient climate response in CMIP5 models [Otto et al., 2013] or an underestimate of decadal variability, but it is also consistent with internal noise unrelated to ENSO, and we thus can neither rule out nor confirm any of the explanations in this analysis." This certainly has a different feel, but it essentially communicates the same information, and is much less likely to be misinterpreted by the reader.
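To put rough numbers on the TCR example, here is a minimal sketch. It assumes (my simplification, not something SST14 state) that the forced warming trend scales linearly with TCR. The two TCR values and the 60-65% figure are the ones quoted above; the variable names are just for illustration.

```python
# TCR values quoted in the text
tcr_otto = 1.3    # K, Otto et al. (2013)
tcr_cmip5 = 1.8   # K, CMIP5 mean

# Assumption: forced warming trend is proportional to TCR.
# Then the models' forced trend exceeds the "true" one by this fraction.
excess_fraction = tcr_cmip5 / tcr_otto - 1

# Share of the model/observation discrepancy left over after the
# SST14 adjustments, which remove about 60-65% of it.
residual_low = 1 - 0.65
residual_high = 1 - 0.60

print(f"Model forced trend too high by about {excess_fraction:.0%}")
print(f"Unexplained share of discrepancy: {residual_low:.0%} to {residual_high:.0%}")
```

The two printed quantities are not directly comparable (one is a fraction of the forced trend, the other a fraction of the discrepancy), so this only shows that a TCR bias of this size is not small relative to what remains unexplained.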

Comment #2: Regarding the overall picture of updated forcings, it is worth pointing out that IPCC AR5 Chapter 9 [Box 9.2, p 770] describes a largely different opinion (ERF = "effective radiative forcing"):

For the periods 1984–1998 and 1951–2011, the CMIP5 ensemble-mean ERF trend deviates from the AR5 best-estimate ERF trend by only 0.01 W m–2 per decade (Box 9.2 Figure 1e, f). After 1998, however, some contributions to a decreasing ERF trend are missing in the CMIP5 models, such as the increasing stratospheric aerosol loading after 2000 and the unusually low solar minimum in 2009. Nonetheless, over 1998–2011 the CMIP5 ensemble-mean ERF trend is lower than the AR5 best-estimate ERF trend by 0.03 W m–2 per decade (Box 9.2 Figure 1d). Furthermore, global mean AOD in the CMIP5 models shows little trend over 1998–2012, similar to the observations (Figure 9.29). Although the forcing uncertainties are substantial, there are no apparent incorrect or missing global mean forcings in the CMIP5 models over the last 15 years that could explain the model–observations difference during the warming hiatus.

(My emphasis). Essentially, the authors of this chapter find a discrepancy of 1.5 * 0.03 = 0.045 W/m^2 over the hiatus, whereas SST14 use a discrepancy of around 0.3 W/m^2, which is nearly 7 times larger! And there do not appear to have been any new revelations about these forcings since the contributions to the report were locked down: the report references the "increasing stratospheric aerosol loading after 2000" and "unusually low solar minimum in 2009" mentioned in the commentary. Regarding the anthropogenic aerosols, both the Shindell et al. (2013) and Bellouin et al. (2011) papers referenced by SST14 for the nitrate and indirect aerosol estimates are also referenced in that AR5 chapter, and Shindell was a contributing author to the chapter. This is not to say that the IPCC is necessarily right in this matter, but it does suggest that not everyone agrees with the magnitude of the forcing difference used in SST14.
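The comparison above is simple enough to check directly. This sketch just reproduces the arithmetic with the figures quoted in the text; treating the hiatus as 1.5 decades is the same rounding used above, and the variable names are mine.

```python
# Figures quoted in the text
ar5_trend_gap = 0.03    # W/m^2 per decade, CMIP5 mean vs. AR5 best estimate (1998-2011)
hiatus_decades = 1.5    # hiatus length, rounded as in the text
sst14_gap = 0.3         # W/m^2, approximate forcing discrepancy used in SST14

# Forcing discrepancy implied by the AR5 trend difference over the hiatus
ar5_gap = ar5_trend_gap * hiatus_decades

# How much larger the SST14 discrepancy is
ratio = sst14_gap / ar5_gap

print(f"AR5-implied discrepancy: {ar5_gap:.3f} W/m^2")
print(f"SST14 discrepancy is about {ratio:.1f} times larger")
```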

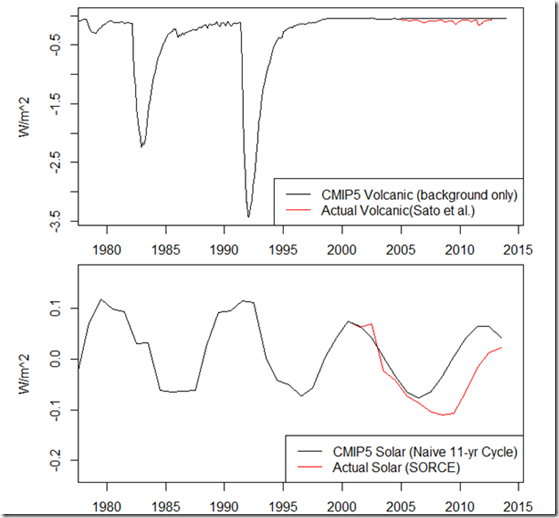

Comment #3: Regarding the solar forcing update, per box 1, SST14 note: "We multiplied the difference in total solar irradiance forcing by an estimated factor of 2, based on a preliminary analysis of solar-only transient simulations, to account for the increased response over a basic energy balance calculation when whole-atmosphere chemistry mechanisms are included."

I would certainly want to see more justification for doubling the solar forcing discrepancy (this choice alone accounts for about 15% of the 1998-2012 forcing discrepancy used)! If I understand correctly, they are saying that they found that the transient response to the solar forcing is approximately double the response to other forcings in their preliminary analysis. But this higher sensitivity to a solar forcing would seem to be an interesting result in its own right, and I would want to know more about this analysis, and what simulations were used – was this observed in one, some, most, or all of the CMIP5 models? After all, if adjusting the CMIP5 model *mean*, it would be important to know that this was a general property shared across most of the CMIP5 models.

Comment #4: For anthropogenic tropospheric aerosols, two adjustments are made: one for the nitrate aerosol forcing, and the second for the aerosol indirect effect. SST14 note that only two models include the nitrate aerosol forcing, whereas half contain the aerosol indirect effect, and so the ensemble and mean are adjusted for these. But it is not clear to me whether the individual runs for each of the CMIP5 models are adjusted (with no adjustments made to runs from models that already include the effect) and the mean recalculated, or whether simply the mean is adjusted. The line "…if the ensemble mean were adjusted using results from a simple impulse-response model with our updated information on external drivers" makes me think the latter. But if it is this latter case, clearly this is incorrect: if half of the models already include the effect, you would be overcorrecting by a factor of 2 if you adjusted the mean by this full amount (perhaps the -0.06 is halved before the adjustment is actually made, but it is not specified).
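The factor of 2 can be illustrated with a small sketch. The ensemble size of 20 is purely hypothetical (only the "half include the effect" split and the -0.06 W/m^2 figure come from the discussion above), and `mean_adjustments` is an illustrative helper, not anything from SST14.

```python
def mean_adjustments(n_models, n_with_effect, missing_forcing):
    """Compare two ways of adjusting an ensemble mean for a missing forcing.

    Returns (per_model, naive):
      per_model: adjust only the models lacking the effect, then average
      naive:     apply the full adjustment directly to the ensemble mean
    """
    n_missing = n_models - n_with_effect
    per_model = missing_forcing * n_missing / n_models
    naive = missing_forcing
    return per_model, naive

# Hypothetical 20-model ensemble, half of which already include the effect
per_model, naive = mean_adjustments(n_models=20, n_with_effect=10,
                                    missing_forcing=-0.06)

print(f"Per-model adjustment of the mean: {per_model:.3f} W/m^2")
print(f"Naive adjustment of the mean:     {naive:.3f} W/m^2")
print(f"Overcorrection factor:            {naive / per_model:.1f}")
```

With half the models already including the effect, the per-model route shifts the mean by half as much as the naive route, whatever the ensemble size.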

Comment #5: Regarding the indirect aerosol forcing, Bellouin et al. (2011) is used as the reference, which uses the HadGEM2-ES model. It is worth noting the caveats:

The first indirect forcing in HadGEM2‐ES, which can be diagnosed to the first order as the difference between total and direct forcing [Jones et al., 2001] might overestimate that effect by considering aerosols as externally mixed, whereas aerosols are internally mixed to some extent. By comparing with satellite retrievals, Quaas et al. [2009] suggest that HadGEM2 is among the climate models that overestimate the increase in cloud droplet number concentration with aerosol optical depth and would therefore simulate too strong a first indirect effect.

Moreover, I am not quite sure of the origin of the -0.06 W/m^2. Bellouin et al. (2011) suggest an indirect effect from nitrates that is ~40% the strength of the direct effect. So if only nitrate aerosols increased over the post-2000 period, I would expect an indirect effect of ~ -0.01 W/m^2. It seems to me that this must include the much-larger effect of sulfate aerosols, which leads me to my next comment…

Comment #6: Sulfate Aerosols. Currently, sulfate aerosols constitute a much larger portion of the aerosol forcing than do other species (nitrates in particular). I presume that for #5, an indirect aerosol forcing of that magnitude would need to result from an increase in sulfur dioxide emissions. But as per the reference of Klimont et al. (2013) in my last post, global sulfur dioxide emissions have been on the decline since 1990, and since 2005 (when the CMIP5 RCP forcings start) the Chinese emissions have been on the decline as well (only India continues to increase). Rather than the lack of indirect forcing artificially warming the models relative to observations, it seems like it has been creating a *cooling* bias over this period, if you use the simple relationship between emissions and forcing as in Smith and Bond (2014). In fact, since 2005 (according to Klimont et al., 2013 again), sulfur dioxide emissions have declined faster than in 3 of the 4 RCP scenarios. It seems likely to me that the decline in sulfur dioxide emissions over this period (and its corresponding indirect effect) would more than counteract the tiny bias from the NO2 emissions.

Conclusion:

Having just done a similar analysis, I thought it important to put the Schmidt et al. (2014) Nature commentary in context. There is enough uncertainty around the actual forcing progression during the "hiatus" to find a set of values that attribute most of the discrepancy between CMIP5 modeled and observed temperatures to forcing differences. However, the values chosen by SST14 do seem to represent the high end of this forcing discrepancy, and it appears that most of the authors of AR5 Chapter 9 believe the forcing discrepancy to be much more muted. Moreover, the SST14 commentary should not be taken to be a response to longer-period, more direct estimates of TCR, such as that of Otto et al. (2013). Specifically, the TCR bias found in that study would be perfectly consistent with the remaining discrepancy and uncertainty present between the CMIP5 models and observations.