Background

Since starting this blog, I’ve been trying to estimate the urban heat island (UHI) effect based on how population trends correlate with temperature trends at various stations. I believe the most successful demonstration of that was here.

Along that path, I stumbled upon the NHGIS, which gives access to aggregate census data. Over the past few weeks I’ve been working with this data to determine any variables that might serve as better proxies for this “UHI” effect.

The Data

All intermediate data, code, and my downloaded NHGIS datasets can be retrieved from here (the biggest package yet).

The temperature data, once again, is USHCNv2.

The data for my variables (e.g. Num Vehicles Available, Aggregate Family Income, etc.) is from NHGIS. The availability of different topics can be seen here. Given the limitations of the data and what I thought could plausibly be proxies for land use/UHI, I was left with only a few choices. Notes on how I got from the raw NHGIS datasets to the variables can be seen in the Origins.txt included in the package above.

Ultimately, I found that many of these variables were decent proxies for the UHI effect, but few were independent of each other and most of them did not perform better than population.

However, the Aggregate Family Income of a “place” DOES seem to be a better proxy for UHI than population, which is unsurprising given that economic development typically spurs surface and land-use changes. Furthermore, the number of workers in Agriculture, Fishing, Forestry, and Hunting seems to be pretty orthogonal to Aggregate Family Income, and is also a decent proxy for UHI in its own right. It is negatively correlated, which also makes sense, because a decrease in these sorts of jobs suggests urbanization. Regressions using these two explanatory variables have led to the best results.

One note on Aggregate Family Income: For determining the magnitude of the effect, I use an inflation-adjusted value for each year, calculated using this site. The inflation-adjusted value is reported in 1970 dollars. While inflation does not affect the correlation, it can greatly affect the estimated magnitude of UHI based on our model.
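To make the inflation point concrete, here is a minimal sketch. The price index and income figures are made-up placeholders (not real CPI or NHGIS values), and `to_base_year_dollars` and `log_trend` are hypothetical helper names. It assumes every place is deflated by the same index, in which case the deflator shifts every place's log trend by the same amount: differences between places are unchanged, but the trend itself, which is what the magnitude estimate uses, is not.

```python
import numpy as np

def to_base_year_dollars(nominal, price_index, base_index):
    """Convert nominal dollars to base-year dollars using a price index."""
    nominal = np.asarray(nominal, dtype=float)
    price_index = np.asarray(price_index, dtype=float)
    return nominal * base_index / price_index

def log_trend(years, values):
    """OLS slope of log(values) against years (log-difference per year)."""
    return np.polyfit(years, np.log(values), 1)[0]

years = np.array([1970, 1980, 1990, 2000])
# Placeholder price index with 1970 = 100. NOT real CPI figures.
index = np.array([100.0, 200.0, 320.0, 440.0])
# Placeholder nominal Aggregate Family Income for two places.
income_a = np.array([1.0e7, 2.5e7, 5.0e7, 9.0e7])
income_b = np.array([1.0e7, 2.0e7, 3.5e7, 5.0e7])

real_a = to_base_year_dollars(income_a, index, base_index=100.0)
real_b = to_base_year_dollars(income_b, index, base_index=100.0)

# The gap between the two places is the same in nominal and real terms...
nominal_gap = log_trend(years, income_a) - log_trend(years, income_b)
real_gap = log_trend(years, real_a) - log_trend(years, real_b)
# ...but each place's own trend is smaller once inflation is removed.
nominal_trend_a = log_trend(years, income_a)
real_trend_a = log_trend(years, real_a)
```

This is why the deflator drops out of comparisons between stations but still matters whenever a coefficient is multiplied by a station's own income trend.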

The Method (#1)

The first method I use is very similar to the one I used with population previously, but I will reproduce some of the basic points here.

1) For each station, the linear temperature trend (dT) is calculated based on the data from 1970-2000. A station is only included if it reports an annual temperature for at least 7 years in every decade from 1970-2000.

2) Similarly, a trend is calculated for both Income and AgrWork (and other variables) at each station, using the 1970, 1980, 1990, and 2000 census values. The trend is in terms of the log of these variables, so dI and dA (which I will use as shorthand) refer to the log-difference each year.

3) Based on latitude and longitude, we match all close station pairs. A match is determined based on the approximate distance in km between the two stations. If the distance between two stations is less than the “threshold” distance, the pair is included.

4) We then regress the difference between the temperature trends of two nearby stations against the differences in the log trends of Income and AgrWork at those same stations. That is, we try to find an equation of the form

(TempTrend2 - TempTrend1) = a * (logIncomeTrend2 - logIncomeTrend1) + b * (logAgrWorkTrend2 - logAgrWorkTrend1) + c
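The steps above can be sketched roughly as follows. This is an illustration, not the code from the package: the function names are hypothetical, the decades are assumed to be binned as 1970-79, 1980-89, and 1990-2000, the haversine formula stands in for the "approximate distance," and ordinary least squares via NumPy is assumed for the regression.

```python
import numpy as np

def temperature_trend(years, temps, min_per_decade=7):
    """Linear trend per year over 1970-2000, or None if any decade is too sparse.

    Assumption: decades are binned as 1970-79, 1980-89, 1990-2000.
    """
    years = np.asarray(years)
    temps = np.asarray(temps, dtype=float)
    ok = ~np.isnan(temps) & (years >= 1970) & (years <= 2000)
    for start, end in [(1970, 1979), (1980, 1989), (1990, 2000)]:
        if np.sum(ok & (years >= start) & (years <= end)) < min_per_decade:
            return None
    return np.polyfit(years[ok], temps[ok], 1)[0]

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km (one possible 'approximate distance')."""
    p1, p2 = np.radians(lat1), np.radians(lat2)
    dlon = np.radians(lon2 - lon1)
    a = np.sin((p2 - p1) / 2) ** 2 + np.cos(p1) * np.cos(p2) * np.sin(dlon / 2) ** 2
    return 2 * 6371.0 * np.arcsin(np.sqrt(a))

def pairwise_regression(lat, lon, dT, dI, dA, threshold_km):
    """Fit (dT2 - dT1) = a*(dI2 - dI1) + b*(dA2 - dA1) + c over close pairs.

    Returns the coefficients (a, b, c) and the number of pairs used.
    """
    rows, y = [], []
    n = len(lat)
    for i in range(n):
        for j in range(i + 1, n):
            if haversine_km(lat[i], lon[i], lat[j], lon[j]) < threshold_km:
                rows.append([dI[j] - dI[i], dA[j] - dA[i], 1.0])
                y.append(dT[j] - dT[i])
    if not rows:
        raise ValueError("no station pairs within the threshold distance")
    coef, *_ = np.linalg.lstsq(np.array(rows), np.array(y), rcond=None)
    return coef, len(y)
```

Note that a station with many close neighbors appears in many pairs, so it carries more weight in this fit than an isolated one.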

The Method (#2)

The other method I used was to simply compare all stations at once. Steps 1) and 2) are exactly the same as above. Then,

3) The U.S. is broken up into 2.5 x 2.5 degree grid cells. A value for dtAdj is then determined for each station: the temperature trend of that station minus the average of the temperature trends of all other stations in its own grid cell.

4) The dtAdj for each station is then compared against the resulting dI and dA for that station.

Step #3 is used to remove the spatial auto-correlation that I’ve encountered before, since we adjust each station’s temperature trend by the trends of others in the region.
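A minimal sketch of steps 3) and 4) follows. Several details here are assumptions of the sketch rather than anything stated above: the function names are hypothetical, grid cells are aligned to multiples of 2.5 degrees, a station that is alone in its cell gets no dtAdj (NaN), and the final comparison is an ordinary least squares fit of dtAdj on dI and dA with an intercept.

```python
import numpy as np

def grid_adjusted_trends(lat, lon, dT, cell_deg=2.5):
    """dtAdj: each station's trend minus the mean trend of the OTHER
    stations in its grid cell. Stations alone in a cell get NaN."""
    lat, lon, dT = (np.asarray(x, dtype=float) for x in (lat, lon, dT))
    row = np.floor(lat / cell_deg).astype(int)
    col = np.floor(lon / cell_deg).astype(int)
    dt_adj = np.full(dT.shape, np.nan)
    for r, c in set(zip(row.tolist(), col.tolist())):
        idx = np.where((row == r) & (col == c))[0]
        if len(idx) < 2:
            continue  # no neighbors to compare against
        total = dT[idx].sum()
        # Leave-one-out mean: drop the station's own trend from the cell average.
        dt_adj[idx] = dT[idx] - (total - dT[idx]) / (len(idx) - 1)
    return dt_adj

def regress_on_proxies(dt_adj, dI, dA):
    """OLS fit dtAdj = a*dI + b*dA + c, using only stations with all three values."""
    dt_adj, dI, dA = (np.asarray(x, dtype=float) for x in (dt_adj, dI, dA))
    ok = np.isfinite(dt_adj) & np.isfinite(dI) & np.isfinite(dA)
    X = np.column_stack([dI[ok], dA[ok], np.ones(ok.sum())])
    coef, *_ = np.linalg.lstsq(X, dt_adj[ok], rcond=None)
    return coef
```

The adjustment in `grid_adjusted_trends` only needs temperature trends, so stations without census data can still contribute to their neighbors' cell averages; they are dropped only in `regress_on_proxies`.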

Why have this second method at all? First of all, it allows for comparing all stations at once, rather than over-representing those stations that have more stations clustered nearby. Second, it allows us to use stations that are more solitary, taking advantage of nearby ones that may have valid temperature data but do not have data for the other variables. In other words, while we are limited to the 400 or so stations that have both our Income data AND temperature data, we can use all 800 or so that have at least temperature data to perform our regional adjustments.

Of course, in the event that we do encounter spatial auto-correlation and the Income increases more in certain grid cells than in others, we are almost certain to get an underestimate of the slope, since we’re subtracting the average trend even if the UHI effect is a net positive.

Results

This first table shows the results of running Method #2 on the Raw, F52, and TOB USHCN datasets, regressing dtAdj against dI and dA.

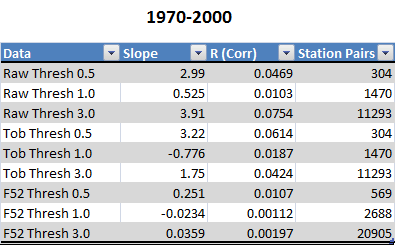

This second table shows the results of Method #1, where we perform the pairwise comparisons. I’ve shown the results at various threshold distances for what constitutes a match.

In both methods, there seems to be a fairly robust signal (outside of the F52 dataset). I will continue forward using the TOB set, with more explanation of why in the discussion at the end.

Magnitude of the Effect

At this point, it’s worthwhile to take a look at the magnitude of the effect. Though there are more sophisticated methods for calculating the yearly US anomaly out there, I only needed an approximation here, and so the method I used was fairly simple: use a 5×5 degree grid for the US, calculate the average anomaly for each grid cell, then average the grid cells.
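For a single year, that gridding step might look like the sketch below. The function name is hypothetical, cells are assumed to be aligned to multiples of 5 degrees, and the cells are averaged with equal weight (no area weighting), which is one reading of "then average the grid cells."

```python
import numpy as np

def gridded_mean_anomaly(lat, lon, anomaly, cell_deg=5.0):
    """Average station anomalies within each grid cell, then average the cells."""
    lat, lon, anomaly = (np.asarray(x, dtype=float) for x in (lat, lon, anomaly))
    valid = ~np.isnan(anomaly)
    cells = {}
    for la, lo, an in zip(lat[valid], lon[valid], anomaly[valid]):
        key = (int(np.floor(la / cell_deg)), int(np.floor(lo / cell_deg)))
        cells.setdefault(key, []).append(an)
    if not cells:
        return float("nan")
    # Each occupied cell counts once, however many stations it contains.
    return float(np.mean([np.mean(values) for values in cells.values()]))
```

Averaging by cell first keeps densely instrumented regions from dominating the national figure, which a plain mean over stations would allow.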

Here’s a graph that shows my resulting anomalies versus those of GISS. (Note that they have different baselines.)

They match up pretty well, with the trends fairly close.

Now, to calculate the magnitude of the effect, I take the anomaly for each station and subtract, for each variable, the product of that variable’s coefficient, the number of years that have elapsed, and the annual trend of that variable at that station.
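As a rough sketch of that subtraction for one station and one year: the function name is hypothetical, elapsed years are assumed to be counted from 1970, and the numbers in the usage lines are placeholders rather than values from the tables.

```python
def uhi_adjusted_anomaly(anomaly, year, dI, dA, coef_income, coef_agr, start_year=1970):
    """Remove the modeled UHI contribution from a station's anomaly.

    dI and dA are the station's annual log trends in Income and AgrWork.
    Assumption: elapsed years are counted from start_year.
    """
    elapsed = year - start_year
    uhi_contribution = (coef_income * dI + coef_agr * dA) * elapsed
    return anomaly - uhi_contribution

# Placeholder inputs, chosen only to show the arithmetic.
raw_anomaly = 0.5
adjusted = uhi_adjusted_anomaly(raw_anomaly, year=2000, dI=0.001, dA=-0.002,
                                coef_income=2.0, coef_agr=-1.0)
removed = raw_anomaly - adjusted  # portion attributed to the two proxies
```

The removed portion is linear in both the coefficients and the station's own trends, so an error in either carries straight through to the adjusted anomaly.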

Clearly, this is going to be extremely sensitive to both the coefficient AND the annual trend of the variable at a particular station. For something like population, the annual trend is pretty straightforward if we have the data. However, with Income it was necessary to adjust for inflation, since an increase in Aggregate Income that is only on par with inflation does not suggest economic development.

Regarding the coefficients, I will show the adjustments using the higher end (9.33, -2.046) and lower end (3.22, -1.4) of our results from above:

On the higher end, we see about 25.4% of our trend due to UHI during this time period, and on the lower end we have about 9.4%.

Discussion

We’re left with a range likely between 10% and 25% for the U.S. during this time period using the TOB dataset. As I’ve discussed before, it is not surprising that we find a weak to non-existent signal in the F52-adjusted dataset. While it is possible that this is due to perfectly removing the UHI effect, it is also quite likely that the infilling of data has only added noise to dilute the signal. Furthermore, when the temperature readings have been adjusted to match nearby stations, we can hardly expect our pairwise tests to then yield anything meaningful.

To me, the real wildcards here are the inhomogeneity adjustments. On the one hand, we may be getting a correlation here between the instrument-related adjustments and the economic development near a particular station, in which case we are overestimating the effect of UHI. On the other hand, adjusting for station equipment type and location moves (while avoiding the pairwise temperature trend adjustments) may in fact increase the signal, suggesting that what we have is an underestimate. This is likely to be my next avenue of research, when I get a chance.