I recently started a small library on GitHub of functions I frequently use to process downloaded CMIP5 runs. I have also uploaded some processed files to that repository: text files of monthly global tas (surface air temperature) for a large fraction of the available CMIP5 runs.

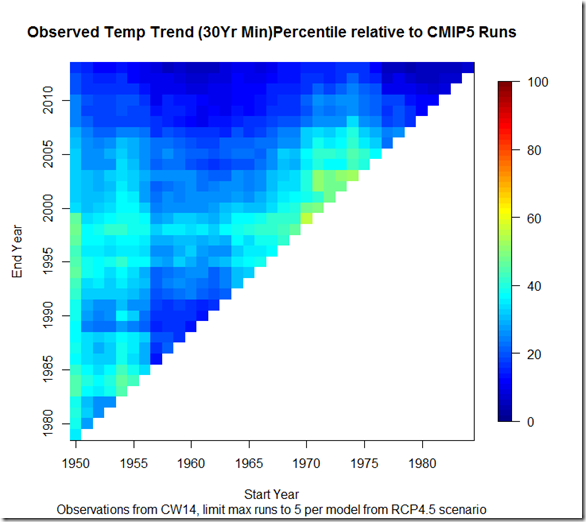

To put these to use, I thought it would be interesting to investigate where the “observed” temperature trend falls relative to all CMIP5 runs for every combination of start and end years from the second half of the 20th century (1950) to the present (2013). Below I show the “percentiles”: red values indicate that the observed temperature trend is running hot relative to the models (the 75th percentile means the observed trend is larger than that of 75% of the model runs over the same period), and blue values indicate that the observed trend is running cool relative to the models.

The list of models included can be found here. To avoid overweighting models that contributed many runs relative to others, I have capped the number of runs included per model at 5. The temperature observations come from the Cowtan and Way (2014) kriging of HadCRUT4. Here is the result for all trends over this period that span 15 years or more:
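For readers who want to reproduce this kind of chart, here is a minimal Python sketch of the percentile calculation. It assumes annual-mean anomalies (rather than the monthly files) aligned on the same years for the observations and every run. The names `ols_slope`, `cap_runs`, and `percentile_grid` are hypothetical, not functions from the library mentioned above, and the data are synthetic stand-ins.

```python
import numpy as np

def ols_slope(y):
    """Least-squares linear trend of y per time step (here: per year)."""
    x = np.arange(len(y), dtype=float)
    return np.polyfit(x, y, 1)[0]

def cap_runs(runs_by_model, max_runs=5):
    """Flatten {model: [run, ...]} keeping at most max_runs runs per model."""
    return [run for runs in runs_by_model.values() for run in runs[:max_runs]]

def percentile_grid(obs, model_runs, min_len=15):
    """grid[i, j] = percentage of model runs whose trend over years i..j is
    smaller than the observed trend over the same years. Pairs spanning fewer
    than min_len annual values are left as NaN."""
    n = len(obs)
    grid = np.full((n, n), np.nan)
    for i in range(n):
        for j in range(i + min_len - 1, n):
            obs_trend = ols_slope(obs[i:j + 1])
            model_trends = np.array([ols_slope(run[i:j + 1]) for run in model_runs])
            grid[i, j] = 100.0 * np.mean(model_trends < obs_trend)
    return grid

# Usage with synthetic annual anomalies (stand-ins for real data).
rng = np.random.default_rng(0)
years = np.arange(1950, 2014)
t = years - years[0]
obs = 0.012 * t + rng.normal(0.0, 0.1, t.size)
runs_by_model = {
    "model_a": [0.018 * t + rng.normal(0.0, 0.1, t.size) for _ in range(8)],
    "model_b": [0.015 * t + rng.normal(0.0, 0.1, t.size) for _ in range(3)],
}
ensemble = cap_runs(runs_by_model)        # 5 runs of model_a + 3 of model_b
grid = percentile_grid(obs, ensemble, min_len=30)
```

Row `i` and column `j` of `grid` correspond to `years[i]` and `years[j]`; `min_len=15` gives the 15-year-or-longer version. Counting runs strictly below the observed trend is one choice among several; ties are rare with continuous data.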

At a glance, there appears to be a good deal more blue than red here, suggesting that many of the observed trends are running toward the cooler end of the models. Some recent papers (e.g., Risbey et al. (2014)) have looked at the “hiatus”, and in particular at 15-year trends, arguing that the most recent low 15-year trend doesn’t necessarily provide evidence of oversensitive CMIP5 models, because these models cannot be expected to simulate the exact phasing of natural variability. They point out that at times in the past, the observed 15-year trends have been larger than those of the models. There is some support for that in the above graph, as shorter trends ending around 1970 or in the 1990s tended to be toward the higher end of the model runs. Moreover, I think that anybody suggesting that the difference between observed and modeled trends during the “hiatus” was due solely to oversensitivity in models is misguided.

That being said, I think that if we move past the “models are wrong” vs. “models are right” dichotomy and on to the “adult” question, “are models, on average, too sensitive in their transient responses?”, we can agree on the following: given the apparently large contribution of internal variability to 15-year trends, we should expect to see observed trends in both the upper and lower percentiles of modeled trends, even if models were on average too sensitive in the transient by, say, 35%. If models are too sensitive, we would simply expect to see more trends in the lower percentiles than in the higher percentiles, as above.
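A toy Monte Carlo can illustrate this point. The sketch below assumes, purely for illustration, a true forced 15-year trend of 0.15 K/decade, models whose forced trend is 35% too large, and independent Gaussian internal variability of 0.10 K/decade in every trend; none of these numbers are fitted to CMIP5 or to observations.

```python
import numpy as np

def simulate_percentiles(forced_trend=0.15, model_bias=1.35, noise_sd=0.10,
                         n_runs=100, n_trials=5000, seed=1):
    """For each synthetic trial, return the percentile of a synthetic observed
    trend within a synthetic model ensemble (all trends in K/decade)."""
    rng = np.random.default_rng(seed)
    # "Observed" trend: true forced trend plus internal variability.
    obs = forced_trend + rng.normal(0.0, noise_sd, n_trials)
    # Model trends: forced trend inflated by model_bias, plus independent noise per run.
    models = model_bias * forced_trend + rng.normal(0.0, noise_sd, (n_trials, n_runs))
    return 100.0 * np.mean(models < obs[:, None], axis=1)

pct = simulate_percentiles()
print(f"above the 75th percentile: {np.mean(pct > 75):.2f}")
print(f"below the 25th percentile: {np.mean(pct < 25):.2f}")
print(f"above the 50th percentile: {np.mean(pct > 50):.2f}")
```

With these made-up settings, the synthetic observed trend still lands above the model median in a noticeable share of trials, but the lower percentiles are visited considerably more often than the upper ones.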

However, I think a more striking picture emerges if we look at only 30-year or longer trends, where some of the higher frequency variations are filtered out:

Here, we see that depending on the start and end years picked, the observed trends generally fluctuate between the 2nd and 50th percentiles of model runs. This seems to be strong evidence that the CMIP5 runs are, on average, running too hot; otherwise, one might expect the observed trends to fluctuate more evenly above and below the 50th percentile. Whether they are running too hot because of incorrect forcings or oversensitivity cannot be divined from the above figure, but I think it provides stronger evidence that the models are running warm than the single most recent 15-year period does.

Script available here.

While you’re looking at trends over multiple time scales, you might be interested in this:

https://sites.google.com/site/climateadj/multiscale-trend-analysis—hadcrut4

Comment by AJ — September 19, 2014 @ 12:55 pm

Wow, the graphical presentations from both Troy and AJ are cool! I like how you have included all trends for all periods in one chart. Here is my more novice take (i.e., only trends ending in 2013): http://www.cato.org/blog/current-wisdom-observations-now-inconsistent-climate-model-predictions-25-going-35-years

-Chip

Comment by Chip Knappenberger — September 23, 2014 @ 9:42 am

Thanks Chip, although I have to credit Nick Stokes, who has been maintaining this type of trend chart for a while now.

Comment by troyca — September 24, 2014 @ 6:58 am

It should be clear that the CMIP5 runs are, on average, running too hot, because they represent more of the radiative effects and no interannual variability. So you can use another approach: take the standard deviation of the Cowtan and Way corrected data (here: http://www-users.york.ac.uk/~kdc3/papers/coverage2013/had4_krig_annual_v2_0_0.txt) and generate 10 runs of random noise with the same standard deviation.

So I downloaded the Cowtan and Way data and the CMIP5 RCP4.5 mean for 1861-2013, re-baselined both sets of anomalies so that their 1861-2012 mean is zero, and added the noise to the CMIP5 mean. I then used the minimum and maximum of the noise runs.
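The white-noise procedure described above might look roughly like this in Python. It is a sketch under stated assumptions: annual series, Gaussian white noise, and synthetic quadratic curves standing in for the Cowtan and Way data and the CMIP5 RCP4.5 mean; `rebaseline` and `white_noise_envelope` are hypothetical helper names.

```python
import numpy as np

def rebaseline(series, years, start, end):
    """Shift anomalies so their mean over start..end (inclusive) is zero."""
    mask = (years >= start) & (years <= end)
    return series - series[mask].mean()

def white_noise_envelope(model_mean, obs, n_runs=10, seed=0):
    """Add white noise with the SD of the observed series to the model mean
    n_runs times; return the lowest and highest value at each time step."""
    rng = np.random.default_rng(seed)
    sd = np.std(obs, ddof=1)
    noisy = model_mean + rng.normal(0.0, sd, (n_runs, model_mean.size))
    return noisy.min(axis=0), noisy.max(axis=0)

# Usage with synthetic stand-ins for the 1861-2013 annual series.
years = np.arange(1861, 2014)
rng = np.random.default_rng(1)
model_mean = rebaseline(0.00005 * (years - 1861) ** 2, years, 1861, 2012)
obs = rebaseline(0.00004 * (years - 1861) ** 2 + rng.normal(0.0, 0.1, years.size),
                 years, 1861, 2012)
low, high = white_noise_envelope(model_mean, obs)
```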

It will look like this: http://www.directupload.net/file/d/3765/nvr9cti3_png.htm

It looks similar to http://www.met.reading.ac.uk/~ed/bloguploads/AR5_11_25.png, but that figure shows more than RCP4.5 alone.

We can also take another approach, because interannual variability is not really pure noise. Now we assume that interannual variability is mainly due to ENSO. To keep it simple, we take the MEI index averaged over July-June years, scale it down to the standard deviation of the observed temperature, and add these values to the CMIP5 RCP4.5 mean.
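A sketch of this ENSO variant, assuming that “July-June values” means averaging the monthly MEI over each July-June year, and that the scaled index is simply added to the model mean. The helper names are hypothetical, the random numbers stand in for the real MEI, and the 0.1 K target SD is a placeholder for the observed standard deviation. No lag between ENSO and global temperature is modeled here.

```python
import numpy as np

def july_june_means(monthly):
    """Average a monthly index that starts in January over July-June years:
    element k covers July of year k through June of year k + 1 (partial
    years at either end are dropped)."""
    trimmed = np.asarray(monthly, dtype=float)[6:]   # skip Jan-Jun of year 0
    n_full = trimmed.size // 12
    return trimmed[: n_full * 12].reshape(n_full, 12).mean(axis=1)

def scale_to_sd(index, target_sd):
    """Standardize an index, then rescale it to a target standard deviation."""
    index = np.asarray(index, dtype=float)
    return (index - index.mean()) / index.std(ddof=1) * target_sd

# Usage with synthetic stand-ins (a real MEI series and model mean would replace these).
rng = np.random.default_rng(2)
mei_monthly = rng.normal(0.0, 1.0, 64 * 12)          # 1950-2013, monthly
enso_term = scale_to_sd(july_june_means(mei_monthly), target_sd=0.1)
model_mean = np.linspace(0.0, 0.6, enso_term.size)  # one value per July-June year
model_plus_enso = model_mean + enso_term
```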

Looks like this: http://www.directupload.net/file/d/3765/soiguhxj_png.htm

That would imply that CMIP5 could be too sensitive to radiative forcing: if I scale the CMIP5 mean down by around 15% (a very simplified approach), it matches the Cowtan and Way version of HadCRUT4 nearly perfectly.
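The “around 15%” was chosen by eye; one more objective (but still simplified) alternative, swapped in here rather than taken from the comment, is a least-squares scale factor between the two anomaly series. The sketch uses synthetic data constructed so the answer should come out near 0.85; `best_scale` is a hypothetical helper.

```python
import numpy as np

def best_scale(model_anom, obs_anom):
    """Factor a minimizing sum((obs - a * model)**2); no intercept, so both
    series should be anomalies on the same baseline."""
    m = np.asarray(model_anom, dtype=float)
    o = np.asarray(obs_anom, dtype=float)
    return float(np.dot(m, o) / np.dot(m, m))

# Usage with synthetic stand-ins: "observations" built from 85% of the model signal.
rng = np.random.default_rng(3)
t = np.arange(153, dtype=float)                   # 1861-2013
model = 0.008 * t - 0.008 * t.mean()              # anomaly relative to its own mean
obs = 0.85 * model + rng.normal(0.0, 0.1, t.size)
a = best_scale(model, obs)
print(f"scale factor {a:.2f}, i.e. about {100 * (1 - a):.0f}% below the model mean")
```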

Or see here: http://www.directupload.net/file/d/3765/9vsm4wj4_png.htm

But this is only close to the truth if all forcings are correctly specified in RCP4.5, and there are doubts about that (see G. Schmidt et al. (2014)). So it is more likely that the projected forcing was too large…

Just my 5 cents on this topic

Comment by Christian — October 4, 2014 @ 7:27 am

Hi Christian,

Thanks for stopping by.

Let me respond to what I think you are suggesting are some possible reasons for the apparent overheating of CMIP5 models:

1) Interannual variability – I don’t believe variability on the timescale of a few years explains much here, since looking at 30-year trends should mitigate the effect of this type of variability. However, some have argued that longer-term, decadal variability may be responsible for some of the discrepancy in the trends over the last 15 years, or perhaps up to 30 years. Let us consider a couple of possibilities:

a) Unbiased trend in the CMIP5 models, and correct magnitude of decadal variation – under this scenario, I would expect the 30-yr observed trends to fall somewhere between the 16th and 84th percentile of model runs most of the time, spending a reasonable amount of time both above and below the 50th percentile. However, as we can see from the graph above, the observed trends tend to almost always fall between the 2nd and 50th percentile of model runs.

b) Unbiased trend in the CMIP5 models, but an underestimate of the magnitude of decadal variation – under this scenario, we might see the 30-yr observed trends spreading out to the 5th and 95th percentiles (or further), but we would still expect to see the observed trends running high relative to the models (well above the 50th percentile), as well as below the 50th percentile. However, again, we see that no matter what start or end date you pick in the last 65 years, the observed 30-yr trend tends to fall below the 50th percentile.

Given this, I tend to reject (a) and (b) as likely possibilities, which suggests some bias in the CMIP5 warming trends. I am not entirely sure what you mean by “radiative effects”, but it seems this could refer to discrepancies in either the radiative forcing or the radiative restoration (that is, sensitivity), both of which could in theory explain the warming bias.

2) Radiative forcing – it is possible that over this time period the extra warming is due to a more positive radiative forcing in CMIP5 models, although AR5 tends to suggest the opposite: the strength of the negative aerosol forcing was reduced, suggesting that observed forcings may have been more positive than those simulated by the CMIP5 models. If this is indeed the case, the CMIP5 models should have been biased cooler, rather than hotter, although I will grant that there is still a significant amount of uncertainty surrounding the aerosol forcing.

3) Sensitivity to forcing – to me, for the reasons discussed above, it seems highly unlikely that #1 & #2 provide a complete explanation for the models running hot relative to observations over almost all 30-year trends shown in the figure, so I tend to think that CMIP5 models are probably, on average, too sensitive.
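Scenarios (a) and (b) can be checked with a small simulation. The sketch assumes no bias in the models' forced trend and Gaussian, independent trend noise, with the model noise either equal to the observed noise (a) or half of it (b); the 0.05 K/decade and 0.025 K/decade values are illustrative, not estimates. It also ignores the strong overlap between neighboring 30-year windows in the real chart.

```python
import numpy as np

def obs_percentiles(model_noise_sd, obs_noise_sd, forced_trend=0.15,
                    n_runs=100, n_trials=5000, seed=2):
    """Percentile of a synthetic observed trend among synthetic model trends
    when the models have no trend bias (all trends in K/decade)."""
    rng = np.random.default_rng(seed)
    obs = forced_trend + rng.normal(0.0, obs_noise_sd, n_trials)
    models = forced_trend + rng.normal(0.0, model_noise_sd, (n_trials, n_runs))
    return 100.0 * np.mean(models < obs[:, None], axis=1)

results = {}
for label, model_sd in [("(a) correct variability", 0.05),
                        ("(b) variability underestimated", 0.025)]:
    pct = obs_percentiles(model_noise_sd=model_sd, obs_noise_sd=0.05)
    results[label] = (np.mean((pct >= 16) & (pct <= 84)), np.mean(pct > 50))
    print(f"{label}: within 16th-84th {results[label][0]:.2f}, "
          f"above 50th {results[label][1]:.2f}")
```

In both synthetic cases the observed trend lands above the 50th percentile roughly half the time; underestimating the variability only pushes more outcomes into the tails, on both sides.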

Please note that I do use the Cowtan and Way (2014) dataset in developing the figures above. That being said, I think your method here has significant difficulties, because you seem to be treating the SD of a time series that contains a largely forced response as the SD of the “noise” (variability) component. Moreover, it is not clear whether you are using an AR model or simply white noise. Clearly the former is more appropriate, but in any case, could your results look “okay” simply because these errors partly counterbalance each other? Either way, this step is largely unnecessary given my approach above, which uses the individual members of the CMIP5 ensemble rather than just the mean, so in theory the “noise” component is already included. (Obviously you could argue that it is better to include “real-world” variability rather than modeled variability if these differ significantly, but it is not clear how one would accurately separate the forced and noise components in observed temperatures without making several other assumptions and using a more sophisticated approach.)
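To illustrate the point about the SD, here is a sketch with a synthetic series made of a linear ramp plus AR(1) noise. It compares the SD of the raw series with the SD of the residuals after removing a linear trend, then fits an AR(1) model to those residuals and simulates new noise from it. The linear detrend is only a crude stand-in for removing the forced response, all parameter values are made up, and the helper names are hypothetical.

```python
import numpy as np

def detrended_residuals(series):
    """Residuals after removing a least-squares linear trend; only a crude
    stand-in for removing the forced response."""
    x = np.arange(series.size, dtype=float)
    slope, intercept = np.polyfit(x, series, 1)
    return series - (slope * x + intercept)

def fit_ar1(resid):
    """Lag-1 autocorrelation phi and innovation SD of a residual series."""
    r = resid - resid.mean()
    phi = np.dot(r[:-1], r[1:]) / np.dot(r, r)
    innov_sd = np.std(r, ddof=1) * np.sqrt(1.0 - phi ** 2)
    return phi, innov_sd

def ar1_noise(phi, innov_sd, n, rng):
    """Simulate x[t] = phi * x[t-1] + e[t], starting from the stationary spread."""
    x = np.empty(n)
    x[0] = rng.normal(0.0, innov_sd / np.sqrt(1.0 - phi ** 2))
    for t in range(1, n):
        x[t] = phi * x[t - 1] + rng.normal(0.0, innov_sd)
    return x

# Synthetic "observations": a forced ramp plus AR(1) variability.
rng = np.random.default_rng(4)
n = 153
obs = 0.008 * np.arange(n) + ar1_noise(0.6, 0.08, n, rng)
resid = detrended_residuals(obs)
phi, innov_sd = fit_ar1(resid)
print(f"SD of raw series {obs.std(ddof=1):.3f} vs residuals {resid.std(ddof=1):.3f}")
synthetic_noise = ar1_noise(phi, innov_sd, n, rng)
```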

The graphs you link to, particularly the one in IPCC AR5, are interesting, although I should note that it is not a very stringent test if the CMIP5 models can “pass” simply if the observations fall within the 95% confidence interval when they are baselined from 1986-2005! I may do a quick demonstration of the lack of power in this test soon, since I think it is worth pointing out.
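One way such a demonstration could be set up, sketched here with made-up numbers rather than real CMIP5 output: build a synthetic ensemble whose forced warming is 35% too strong, re-baseline everything to 1986-2005, and count how often a synthetic 2013 observation still falls inside the ensemble's 2.5th-97.5th percentile range. The white noise (SD 0.1 K), the 0.012 K/yr forced trend, and the helper names are assumptions for illustration only.

```python
import numpy as np

def anomalies(x, years, start=1986, end=2005):
    """Anomalies from the start..end mean along the last (time) axis."""
    mask = (years >= start) & (years <= end)
    return x - x[..., mask].mean(axis=-1, keepdims=True)

def within_envelope(years, obs, runs, year, lo=2.5, hi=97.5):
    """True if the observed anomaly in `year` lies inside the ensemble's
    lo..hi percentile range, after re-baselining everything to 1986-2005."""
    idx = int(np.searchsorted(years, year))
    obs_a = anomalies(obs, years)
    runs_a = anomalies(runs, years)
    low, high = np.percentile(runs_a[:, idx], [lo, hi])
    return bool(low <= obs_a[idx] <= high)

# Toy test of power: the models' forced warming is 35% too strong.
rng = np.random.default_rng(5)
years = np.arange(1950, 2014)
forced = 0.012 * (years - 1950)                  # illustrative "true" forced warming
runs = 1.35 * forced + rng.normal(0.0, 0.1, (100, years.size))
passes = [within_envelope(years, forced + rng.normal(0.0, 0.1, years.size), runs, 2013)
          for _ in range(500)]
print(f"share of synthetic observations passing: {np.mean(passes):.2f}")
```

Because the 1986-2005 baseline sits less than two decades before 2013, the accumulated bias is still small relative to the ensemble spread, so most synthetic observations pass even though the ensemble is biased by construction.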

I performed a similar analysis on the hiatus in this post, adjusting for ENSO effects as well. Your 15% number sounds reasonable (implies a TCR around 1.5 K?), although obviously I got a slightly lower number.
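As a rough check of the ~1.5 K guess: assuming the CMIP5 multi-model mean TCR of about 1.8 K reported in AR5, and assuming that scaling the modeled temperature response down by 15% scales the TCR by the same factor (which only holds if the forcing is correct), the arithmetic is:

$$
\mathrm{TCR}_{\text{scaled}} \approx (1 - 0.15) \times 1.8\ \mathrm{K} \approx 1.53\ \mathrm{K}
$$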

I agree it is possible, although I did a post on Schmidt et al (2014) at the time explaining why I was skeptical. Moreover, it appears that Kühn et al (2014) also suggests a *positive* (rather than negative) RF contribution from aerosols in the real world over the period of the hiatus. I think it is also important to note that Schmidt et al (2014) looked at a single period with a trend shorter than 30 years; in my opinion, the argument for the oversensitivity of models is bolstered by looking at the collection of 30-yr trends shown in my picture above.

Thanks!

-Troy

Comment by troyca — October 4, 2014 @ 12:36 pm

Hi Troy,

On CMIP5 and interannual variability:

Investigating trends alone, as you did above, can lead to errors, because the CMIP5 mean is purely radiatively forced, while HadCRUT4 is radiatively forced and also contains a lot of interannual variability, much of which happened to counter the forced response to volcanic eruptions. Very strong positive ENSO phases fell within the volcanic episodes, so HadCRUT4 was often offset by them and could not fully respond to the volcanic forcing. This distorts your trends, as you can see in your first picture (15-year trends), where the observed trend is near the 80th percentile in two places. In a fully drawn picture, the CMIP5 trend would first be too high and then too low, in response to the volcanic episodes being countered by ENSO.

The only real anomaly is the hiatus. Let's look at it another way: I use 20-year running trends since 1863 (counting backward from 2013).

Here:

http://www.directupload.net/file/d/3766/9y5qmkq4_png.htm
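Running trends like these are straightforward to compute; here is a sketch that fits an OLS slope to every consecutive 20-year window and labels each by its end year. The window convention (annual data, labelling by end year) is an assumption, `running_trends` is a hypothetical helper, and the test series is a plain linear ramp.

```python
import numpy as np

def running_trends(years, series, window=20):
    """OLS trend (units per year) over every consecutive `window`-year span.
    Returns the end year of each window and the corresponding trend."""
    years = np.asarray(years, dtype=float)
    series = np.asarray(series, dtype=float)
    ends, trends = [], []
    for i in range(series.size - window + 1):
        x = years[i:i + window]
        slope = np.polyfit(x - x.mean(), series[i:i + window], 1)[0]
        ends.append(years[i + window - 1])
        trends.append(slope)
    return np.array(ends), np.array(trends)

years = np.arange(1863, 2014)
ends, trends = running_trends(years, 0.01 * (years - 1863))
```

Reversing the arrays (`ends[::-1]`, `trends[::-1]`) lists them from 2013 backward.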

And compare the full time series (not trends):

http://www.directupload.net/file/d/3766/bub2mn3i_png.htm

So perhaps there are a few arguments we can make:

1. The CMIP5 overestimate of the trend for 1950-1960 is mainly due to the 1940s anomaly in HadCRUT4.

2. The CMIP5 overestimate and different timing of the trend for 1970-1980 is mainly due to the early, strong modeled volcanic impact between 1964 and 1970, which leads to an abnormal recovery trend compared to HadCRUT4.

3. The CMIP5 underestimate of the trend for the 1990s-2000s is because ENSO countered the impact of volcanic activity in this period and reduced the related cooling. The impact of volcanic activity on the CMIP5 trend is clearly visible as a dip during this period.

So I find it too easy to say that the observed trend has to fall both above and below the 50th percentile.

On noise:

I simply used white noise because it is much easier. For models that is not completely true, but their physical interannual variability often looks like random noise. That was only meant to demonstrate that “forced” noise (ENSO) can break outside the range of random noise. And it's true that the noise will counterbalance itself, but that is also nearly true for the CMIP5 mean: if you run an EBM with the RCP4.5 forcing, you get almost the same result as the model mean. And that's the point: you can use the model ensemble, but most members don't give nearly the right results; perhaps a small number do, which coincidentally have noise very similar to that in HadCRUT4. I tested this two years ago and found 2 or 3 such members, but I lost the results last year when my hard drive crashed.
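For reference, the simplest energy balance model (EBM) is a single heat reservoir, C dT/dt = F - λT. The sketch below integrates it with annual forward-Euler steps. The feedback parameter λ = 1.2 W m-2 K-1 and heat capacity C = 8 W yr m-2 K-1 are illustrative assumptions, not values fitted to CMIP5, and the linear forcing ramp stands in for the actual RCP4.5 forcing series; `one_box_ebm` is a hypothetical name.

```python
import numpy as np

def one_box_ebm(forcing, lam=1.2, heat_capacity=8.0, dt=1.0):
    """Integrate C dT/dt = F - lam * T with forward Euler steps of dt years.
    forcing: W m-2 per step; lam: W m-2 K-1; heat_capacity: W yr m-2 K-1."""
    forcing = np.asarray(forcing, dtype=float)
    temp = np.zeros(forcing.size)
    for t in range(1, forcing.size):
        temp[t] = temp[t - 1] + dt * (forcing[t - 1] - lam * temp[t - 1]) / heat_capacity
    return temp

# Usage with a made-up forcing ramp (a real run would read the RCP4.5 forcing file).
ramp = np.linspace(0.0, 2.5, 150)
response = one_box_ebm(ramp)
```

With constant forcing the response settles at F/λ; with a ramp it warms steadily while lagging behind that equilibrium.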

On the AR5 graph:

Yes, I agree, but it should also be noted that the variability we have observed so far does not necessarily represent its full range. Just look at ENSO: we are now in the longest period without an El Niño since CPC records began (see here: http://www.cpc.ncep.noaa.gov/products/analysis_monitoring/ensostuff/ensoyears.shtml).

On ENSO adjustment and the hiatus:

The TCR works out to 1.45 K. I've read your approach, and it's interesting to see the PDO treated as a long-lived memory of ENSO. Next time I will try it with the PDO.

On forcing:

Yes, I agree: there are many doubts about the forcing over the last decade, and there is no real evidence of which direction it is biased in. There is also more that we have not included here, such as effects from other modes of variability, e.g. WACCy (Judah Cohen et al. 2014), or increased heat uptake by the deeper ocean (Balmaseda 2013).

And thanks also for the fast reply.

Comment by Christian — October 5, 2014 @ 2:05 am

Or look at the seasonal trends (DJF, MAM, JJA, SON) in GISTEMP.

For the “hiatus”, 1998-2013 (in quotes because there has been warming since 1998):

DJF: -0.004 K/yr

MAM: +0.006 K/yr

JJA: +0.006 K/yr

SON: +0.016 K/yr

For the real hiatus, 2002-2013 (because there is no longer a “linear trend”):

DJF: -0.014 K/yr

MAM: 0 K/yr

JJA: +0.008 K/yr

SON: +0.004 K/yr
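Seasonal trends like those above could be computed along these lines. The sketch assumes monthly anomalies with explicit year and month arrays, assigns each DJF season to the year of its January (so it includes the previous December), keeps only complete seasons, and fits OLS trends in K/yr. It uses a synthetic series with a known trend of 0.01 K/yr rather than GISTEMP, so it does not reproduce the numbers above; the helper names are hypothetical.

```python
import numpy as np

SEASONS = {"DJF": (12, 1, 2), "MAM": (3, 4, 5), "JJA": (6, 7, 8), "SON": (9, 10, 11)}

def seasonal_means(years, months, values, season):
    """Mean of `values` for each complete season; DJF is labelled with the
    year of its January, so it uses December of the previous year."""
    wanted = SEASONS[season]
    season_year = np.where(months == 12, years + 1, years) if season == "DJF" else years
    out_years, out_means = [], []
    for yr in np.unique(season_year):
        sel = (season_year == yr) & np.isin(months, wanted)
        if sel.sum() == 3:                      # only complete seasons
            out_years.append(yr)
            out_means.append(values[sel].mean())
    return np.array(out_years), np.array(out_means)

def seasonal_trend(years, months, values, season, start, end):
    """OLS trend (K/yr) of the seasonal means for season-years start..end."""
    yrs, means = seasonal_means(years, months, values, season)
    keep = (yrs >= start) & (yrs <= end)
    x = yrs[keep].astype(float)
    return np.polyfit(x - x.mean(), means[keep], 1)[0]

# Synthetic monthly series for 1997-2013 with a trend of 0.01 K/yr.
yrs = np.repeat(np.arange(1997, 2014), 12)
mons = np.tile(np.arange(1, 13), 17)
vals = 0.01 * (yrs + (mons - 1) / 12)
djf_trend = seasonal_trend(yrs, mons, vals, "DJF", 1998, 2013)
```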

The hiatus is clearly due mainly to winter temperatures, because besides ENSO there are some WACCy effects in winter and spring. Summer and fall are less strongly related to WACCy, because there is no longer a stratospheric vortex above the Arctic at those times of year. They are also less affected by ENSO, because ENSO is mainly a wintertime phenomenon (with a lag to global temperature).

The divergence between winter and spring leads to the conclusion that, in winter especially, something more than ENSO must be at work, so in my opinion there is a WACCy impact in wintertime that also contributes to the hiatus.

This could imply that the models have a seasonal bias rather than a sensitivity bias, or both.

Comment by Christian — October 5, 2014 @ 3:42 am

Hi all, maybe it’s interesting not only to focus on the global means and to compare GISS or maybe CW+HadCRUT4. When looking for corrections related to decadal and/or multidecadal variations, the difference between NH and SH temperatures (the interhemispheric temperature asymmetry, ITA) could be very interesting (see http://www.atmos.washington.edu/~dargan/papers/fhcf13.pdf). In chapter 6 of the paper you’ll find a discussion of the “drop” in the late 60s (not replicated in CMIP5) and the thesis that this was internal variability, perhaps of the MOC. For the years after 2000, the models project a highly linear ITA trend with a slope of 0.17 K/decade for RCP8.5, and this seems a little unrealistic to me, because the ITA would climb to 1.8 K by 2100. What about comparing the ITA trends from CMIP5 with the observations?
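A minimal sketch of the suggested comparison, assuming annual NH and SH mean series are available for both models and observations; the function names are hypothetical and the series below are synthetic. It also includes the back-of-the-envelope arithmetic behind the 2100 figure: 0.17 K/decade sustained for ten decades adds about 1.7 K, on top of whatever ITA value existed around 2000.

```python
import numpy as np

def ita(nh, sh):
    """Interhemispheric temperature asymmetry: NH minus SH temperature."""
    return np.asarray(nh, dtype=float) - np.asarray(sh, dtype=float)

def trend_per_decade(years, series):
    """OLS trend of series against years, in units per decade."""
    x = np.asarray(years, dtype=float)
    return 10.0 * np.polyfit(x - x.mean(), np.asarray(series, dtype=float), 1)[0]

# Synthetic stand-ins: the NH warms 0.017 K/yr faster than the SH.
years = np.arange(2000, 2101)
nh = 0.035 * (years - 2000)
sh = 0.018 * (years - 2000)
asym = ita(nh, sh)
model_trend = trend_per_decade(years, asym)      # 0.17 K/decade by construction
added_by_2100 = model_trend * (2100 - 2000) / 10
```

The same `trend_per_decade` call applied to an observed ITA series over a matching period would give the number to compare against.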

Comment by Frank — October 6, 2014 @ 10:42 am